Introduction

“This hot new field promises to revolutionize industries from business to government, health care to academia,” says the New York Times. People have woken up to the fact that unless they analyze the massive amounts of data at their disposal and extract valuable insights from it, there is really no way for their organizations to sustain success in the coming years.

Touted as the most promising profession of the century, data science needs business-savvy people whose key skills include data literacy and strategic thinking. Anjul Bhambri, VP of Architecture at Adobe, says, “A Data Scientist is somebody who is inquisitive, who can stare at data and spot trends. It’s almost like a Renaissance individual who really wants to learn and bring change to an organization.” (She was previously IBM’s VP of Big Data Products.)

How do we get value from this avalanche of data in every sector of the economy? Well, we get persistent, data-mad personnel skilled in math, stats, and programming to weave magic using reams of letters and numbers.

Over the last few years, people have moved away from the umbrella term “data scientist.” Companies now advertise for a diverse set of job roles such as data engineers, data architects, business analysts, MIS reporting executives, statisticians, machine learning engineers, and big data engineers.

In this post, you’ll get a quick overview of these exciting positions in the field of analytics. But do remember that companies often define job roles based on their own internal workings rather than on standard market descriptions.

List of Job Roles in Data Science / Big Data

1. MIS Reporting Executive

Business managers rely on Management Information System reports to automatically track progress, make decisions, and identify problems. Most systems give you on-demand reports that collate business information, such as sales revenue, customer service calls, or product inventory, which can be shared with key stakeholders in an organization.
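To make “collating” concrete, here is a minimal Python sketch of the kind of roll-up an on-demand report performs. The `SALES` records and the `revenue_report` helper are hypothetical; a real MIS would pull figures from operational databases rather than an in-memory list.

```python
from collections import defaultdict

# Hypothetical transaction records; a real MIS would read these from
# operational systems such as a sales database.
SALES = [
    {"region": "North", "product": "Widget", "revenue": 1200.0},
    {"region": "South", "product": "Widget", "revenue": 800.0},
    {"region": "North", "product": "Gadget", "revenue": 450.0},
    {"region": "South", "product": "Gadget", "revenue": 300.0},
]


def revenue_report(records, key):
    """Collate total revenue per value of `key` (e.g. "region" or "product")."""
    totals = defaultdict(float)
    for row in records:
        totals[row[key]] += row["revenue"]
    # Sort descending so the biggest contributors appear first in the report.
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for name, total in revenue_report(SALES, "region"):
        print(f"{name:<10}{total:>10.2f}")
```

Swapping the `key` argument (say, to `"product"`) produces a different view of the same data, which is roughly what “on-demand” reporting means in practice.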

Skills Required:

MIS reporting executives typically have degrees in computer science or engineering, information systems, or business management or financial analysis. Some universities also offer degrees in MIS. Look at this image from the University of Arizona, which clearly distinguishes MIS from CS and engineering.

Roles & Responsibilities:

MIS reporting executives meet with top clients and with co-workers in the public relations, finance, operations, and marketing teams to discuss how well the systems are helping the business achieve its goals, discern areas of concern, and troubleshoot system-related problems, including security issues.

They are proficient in handling data management tools and different types of operating systems, implementing enterprise hardware and software systems, and coming up with best practices, quality standards, and service level agreements. As the saying goes, an MIS executive is a “communication bridge between business needs and technology.”

2. Business Analyst

Although many of their job tasks are similar to those of data analysts, business analysts are experts in the domain they work in. They try to narrow the gap between business and IT. Business analysts provide solutions, often technology-based, to enhance business processes such as distribution or productivity.

Organizations need these “information conduits” for a plethora of things such as gap analysis, requirements gathering, knowledge transfer to developers, defining scope using optimal solutions, test preparation, and software documentation.

Skills Required:

Apart from a degree in business administration in the field of your choice, say, healthcare or finance, aspiring business analysts need to have knowledge of data visualization tools such as Tableau and requisite IT know-how, including database management and programming.

You could also major in computer science with additional courses in statistics, organizational behavior, and quality management. Or you could earn professional certifications such as the Certified Business Analysis Professional (CBAP®) or the PMI Professional in Business Analysis (PMI-PBA). Many universities offer degrees in business intelligence, business analytics, and analytics; check out the courses offered in the U.S. and India.

Roles & Responsibilities:

Business analysts identify business needs, crystallizing the data for easy understanding, manipulation, and analysis via clear and precise requirements documentation, process models, and wireframes. They identify key gaps, challenges, and potential impacts of a solution or strategy.

In a day, a business analyst could be doing anything from defining a business case or eliciting information from top management to validating solutions or conducting quality testing. Business analysts need to be effective communicators and active listeners, resilient and incisive, to translate tech speak or statistical analysis into business intelligence.

They use predictive, prescriptive, and descriptive analysis to transform complex data into easily understood actionable insights for the users. A change manager, a process analyst, and a data analyst could well be doing business analysis tasks in their everyday work.

3. Data Analyst

Compared with data scientists, data analysts are more generalist. Udacity calls them junior data scientists. They take on a gamut of tasks, from acquiring massive amounts of data to processing and summarizing it.

Skills Required:

Data analysts are expected to know R, Python, HTML, SQL, C++, and JavaScript. They need to be more than a little familiar with data retrieval and storage systems, data visualization, data warehousing using ETL tools, Hadoop-based analytics, and business intelligence concepts. These persistent and passionate data miners usually have a strong background in math, statistics, machine learning, and programming.

Roles & Responsibilities:

Data analysts are involved in data munging and data visualization. When stakeholders make requests, data analysts query databases to answer them. They are in charge of scraped data, ensuring its quality and managing it. They have to interpret the data and communicate their findings effectively.

Optimization is a must-know skill for a data analyst. Designing and deploying algorithms, culling information and recognizing risk, extrapolating data using advanced computer modeling, triaging code problems, and pruning data are all in a day’s work for a data analyst.

4. Statistician

Statisticians collect, organize, present, analyze, and interpret data to reach valid conclusions and make correct decisions. They are key players in ensuring the success of companies involved in market research, transportation, product development, finance, forensics, sport, quality control, environment, education, and also in governmental agencies. A lot of statisticians continue to enjoy their place in academia and research.

Skills Required:

Typically, statisticians need higher degrees in statistics, mathematics, or another quantitative subject. They need to be mini-experts in the industries they choose to work in. They need to be well-versed in R, MATLAB, SAS, Python, Stata, Pig, Hive, SQL, and Perl.

They need a strong background in statistical theory, machine learning, data mining and munging, cloud and distributed tools, and DBMSs. Data visualization is a hugely useful skill for a statistician. Aside from industry knowledge and problem-solving and analytical skills, excellent communication is a must for reporting results to non-statisticians clearly and concisely.

Roles & Responsibilities:

Using statistical analysis software tools, statisticians analyze collected or extracted data, trying to identify patterns, relationships, or trends to answer data-related questions posed by administrators or managers. They interpret the results, along with strategic recommendations or incisive predictions, using data visualization tools or reports.

Maintaining databases and statistical programs, ensuring data quality, and devising new programs, models, or tools if required also come under the purview of statisticians. Translating boring numbers into exciting stories is no easy task!

5. Data Scientist

One of the most in-demand professionals today, data scientists rule the roost of number crunchers. Glassdoor says this is the best job role for someone focusing on work-life balance. Data scientists are no longer just scripting success stories for global giants such as Google, LinkedIn, and Facebook.

Almost every company has some sort of data role on its careers page, and job descriptions for data scientists and data analysts show significant overlap.

Skills Required:

They are expected to be experts in R, SAS, Python, SQL, MATLAB, Hive, Pig, and Spark. They typically hold higher degrees in quantitative subjects such as statistics and mathematics and are proficient in big data technologies and analytical tools. Using Burning Glass’s Labor Insight tool, Rutgers students came up with some key insights after going through 2015 job postings data with a fine-toothed comb.

Roles & Responsibilities:

As Jean-Paul Isson of Monster Worldwide, Inc., says, “Being a data scientist is not only about data crunching. It’s about understanding the business challenge, creating some valuable actionable insights to the data, and communicating their findings to the business.” Data scientists come up with queries.

Along with predictive analytics, they also use coding to sift through large amounts of unstructured data to derive insights and help design future strategies. Data scientists clean, manage, and structure big data from disparate sources. These “curious data wizards” are versatile to say the least—they enable data-driven decision making often by creating models or prototypes from trends or patterns they discern and by underscoring implications.

6. Data Engineer/Data Architect

“Data engineers are the designers, builders and managers of the information or ‘big data’ infrastructure.” Data engineers ensure that an organization’s big data ecosystem runs without glitches so that data scientists can carry out their analysis.

Skills Required:

Data engineers are computer engineers who must know Pig, Hadoop, MapReduce, Hive, MySQL, Cassandra, MongoDB, NoSQL, SQL, data streaming, and programming. They have to be proficient in R, Python, Ruby, C++, Perl, Java, SAS, SPSS, and MATLAB.

Other must-have skills include knowledge of ETL tools, data APIs, data modeling, and data warehousing solutions. They are typically not expected to know analytics or machine learning.

Roles & Responsibilities:

Data infrastructure engineers develop, construct, test, and maintain highly scalable data management systems. Unlike data scientists, who take an exploratory and iterative path to a solution, data engineers look for the linear path. Data engineers also improve existing systems by integrating newer data management technologies.

They develop custom analytics applications and software components. Data engineers collect and store data, do real-time or batch processing, and serve it to data scientists for analysis via an API. They log and handle errors, identify when to scale up, ensure seamless integration, and “build human-fault-tolerant pipelines.” A typical career path is Data Engineer → Senior Data Engineer → BI Architect → Data Architect.
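The batch flow described above, including the habit of logging and handling bad records rather than letting the whole pipeline crash, can be sketched as a toy extract-transform-load job in Python. This is an illustration only: the `RAW` data, the `extract`/`transform`/`load` functions, and the plain list standing in for a warehouse are all hypothetical, and production pipelines would rely on dedicated ETL and orchestration tools.

```python
import csv
import io
import logging

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("pipeline")

# Hypothetical raw input; in practice this would come from files, APIs, or streams.
RAW = """order_id,amount
1,19.99
2,not-a-number
3,5.01
"""


def extract(text):
    """Extract: parse CSV text into a list of dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: convert types, logging and setting aside bad rows instead of crashing."""
    clean, rejected = [], []
    for row in rows:
        try:
            clean.append({"order_id": int(row["order_id"]), "amount": float(row["amount"])})
        except (KeyError, ValueError) as err:
            log.warning("rejected row %r: %s", row, err)
            rejected.append(row)
    return clean, rejected


def load(rows, store):
    """Load: append clean rows to a store (a list standing in for a warehouse table)."""
    store.extend(rows)
    return len(rows)


if __name__ == "__main__":
    warehouse = []
    clean, rejected = transform(extract(RAW))
    loaded = load(clean, warehouse)
    log.info("loaded %d rows, rejected %d", loaded, len(rejected))
```

Keeping rejected rows (rather than silently dropping them) is one simple way to make a pipeline tolerant of messy input while still leaving an audit trail.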

7. Machine Learning Engineer

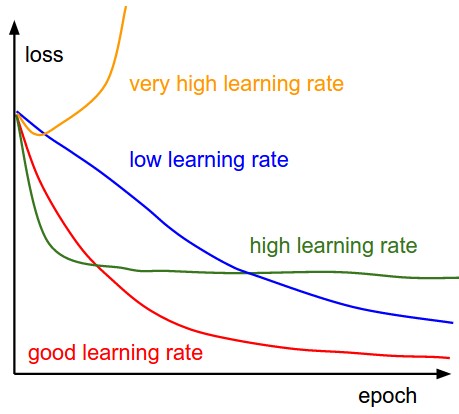

Machine learning (ML) has become quite a booming field with the mind-boggling amount of data we have to tap into. And, thankfully, the world still needs engineers who use amazing algorithms to make sense of this data.

Skills Required:

Engineers should focus on Python, Java, Scala, C++, and JavaScript. To become a machine learning engineer, you need to know how to build highly scalable distributed systems, have a firm grasp of machine learning concepts, be comfortable working with big datasets, and work in teams that focus on personalization.

ML engineers are data- and metric-driven and have a strong foundation in mathematics and statistics. They are expected to have experience with Elasticsearch, SQL, Amazon Web Services, and REST APIs. As always, great communication skills are vital for explaining complex ML concepts to non-experts.

Roles & Responsibilities:

Machine learning engineers have to design and implement machine learning applications/algorithms such as clustering, anomaly detection, classification, or prediction to address business challenges. ML engineers build data pipelines, benchmark infrastructure, and do A/B testing.

They work collaboratively with product and development teams to improve data quality via tooling, optimization, and testing. ML engineers have to monitor the performance and ensure the reliability of machine learning systems in the organization.
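A/B testing often comes down to asking whether one variant’s conversion rate is genuinely higher than another’s or just noise. One common approach (not the only one) is a two-proportion z-test. The minimal Python sketch below assumes simple conversion counts per variant; the function name and the sample numbers are illustrative.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided two-proportion z-test for the conversion rates of variants A and B.

    conv_* are conversion counts and n_* are the numbers of users exposed.
    Returns (z, p_value); a positive z means B converted better than A.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Under the null hypothesis both variants share one rate, so pool the data.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("all-or-nothing conversions: z-test is undefined")
    z = (p_b - p_a) / se
    # Standard normal CDF via the error function, then a two-sided p-value.
    cdf = 0.5 * (1 + math.erf(abs(z) / math.sqrt(2)))
    p_value = 2 * (1 - cdf)
    return z, p_value


if __name__ == "__main__":
    z, p = two_proportion_z_test(200, 2000, 260, 2000)  # hypothetical counts
    print(f"z = {z:.2f}, p = {p:.4f}")
```

With hypothetical counts of 200 conversions out of 2,000 users for A and 260 out of 2,000 for B, this gives a z-score of roughly 2.97 and a p-value of roughly 0.003, which would usually be read as a real difference. In practice, ML engineers also have to decide sample sizes and stopping rules before the test starts.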

8. Big Data Engineer

What a big data solutions architect designs, a big data engineer builds, says DataFloq founder Mark van Rijmenam. Big data is a big domain, and every kind of role has its own specific responsibilities.

Skills Required:

Big data engineers, who have computer engineering or computer science degrees, need to know the basics of algorithms and data structures, distributed computing, Hadoop cluster management, HDFS, MapReduce, stream-processing solutions such as Storm or Spark, big data querying tools such as Pig, Impala, and Hive, data integration, NoSQL databases such as MongoDB, Cassandra, and HBase, frameworks such as Flume and ETL tools, messaging systems such as Kafka and RabbitMQ, and big data toolkits such as H2O, SparkML, and Mahout.

They must have experience with Hortonworks, Cloudera, and MapR. Knowledge of different programming and scripting languages is non-negotiable. Usually, candidates with 1 to 3 years of experience handling databases and software development are preferred for an entry-level position.

Roles & Responsibilities:

Van Rijmenam says, “Big data engineers develop, maintain, test, and evaluate big data solutions within organizations. Most of the time they are also involved in the design of big data solutions, because of the experience they have with Hadoop[-]based technologies such as MapReduce, Hive, MongoDB or Cassandra.”

To support big data analysts and meet business requirements via customization and optimization of features, big data engineers configure, use, and program big data solutions. Using various open source tools, they “architect highly scalable distributed systems.” They have to integrate data processing infrastructure and data management.

It is a highly cross-functional role. With more years of experience come greater responsibilities in development and operations; policies, standards, and procedures; communication; business continuity and disaster recovery; coaching and mentoring; and research and evaluation.

Summary

Companies are running helter-skelter looking for experts who can draw meaningful conclusions and make logical predictions from mammoth amounts of data. To meet these requirements, a slew of new job roles has cropped up, each with slightly different responsibilities and skill requirements.

Blurring boundaries aside, these job roles are all equally exciting and in demand. Whether you are a data hygienist, data explorer, data modeling expert, data scientist, or business solution architect, ramping up your skill portfolio is always the best way forward.

Look at these trends from Indeed.com

If you know exactly what you want to do with your coveted skillset comprising math, statistics, and computer science, then all you need to do is hone the specific combination that will make you a name to reckon with in the field of data science or data engineering.
