Introduction

Data classification is a very important task in machine learning. Support Vector Machines (SVMs) are widely applied to pattern classification and nonlinear regression. The original form of the SVM algorithm was introduced by Vladimir N. Vapnik and Alexey Ya. Chervonenkis in 1963. Since then, SVMs have evolved considerably and have been used successfully in many real-world problems, such as text (and hypertext) categorization, image classification, bioinformatics (protein classification, cancer classification), and handwritten character recognition.

Table of Contents

- What is a Support Vector Machine?

- How does it work?

- Derivation of SVM Equations

- Pros and Cons of SVMs

- Python and R implementation

What is a Support Vector Machine (SVM)?

A Support Vector Machine is a supervised machine learning algorithm that can be used for both classification and regression problems. It uses a technique called the kernel trick to transform the data and, based on these transformations, finds an optimal boundary between the possible outputs.

In simple words, it performs some complex data transformations to figure out how to separate the data based on the labels or outputs defined. We will be looking only at the SVM classification algorithm in this article.

How does it work?

The main idea is to identify the optimal separating hyperplane which maximizes the margin of the training data. Let us understand this objective term by term.

What is a separating hyperplane?

We can see that it is possible to separate the data given in the plot above. For instance, we can draw a line such that all the points above it are green and all the points below it are red. Such a line is said to be a separating hyperplane.

This raises an obvious question: why is it called a hyperplane if it is a line?

In the diagram above, we have considered the simplest example, i.e., the dataset lies in the 2-dimensional plane ($\mathbb{R}^2$). But the support vector machine can work for a general n-dimensional dataset too, and in higher dimensions the hyperplane is the generalization of a plane.

More formally, a hyperplane is an (n−1)-dimensional affine subspace of an n-dimensional Euclidean space. So for a

- 1D dataset, a single point represents the hyperplane.

- 2D dataset, a line is a hyperplane.

- 3D dataset, a plane is a hyperplane.

- higher-dimensional dataset, it is simply called a hyperplane.

We have said that the objective of an SVM is to find the optimal separating hyperplane. When is a separating hyperplane said to be optimal?

The fact that there exists a hyperplane separating the dataset doesn’t mean that it is the best one.

Let us understand the optimal hyperplane through a set of diagrams.

- Multiple hyperplanes

There are multiple hyperplanes, but which of them separate the data? It can easily be seen that line B is the one that best separates the two classes.

- Multiple separating hyperplanes

There can be multiple separating hyperplanes as well. How do we find the optimal one? Intuitively, if we select a hyperplane that is close to the data points of one class, it might not generalize well. So the aim is to choose the hyperplane that is as far as possible from the data points of each class.

- In the diagram above, the hyperplane that meets the specified criteria for the optimal hyperplane is B.

Therefore, maximizing the distance between the nearest points of each class and the hyperplane would result in an optimal separating hyperplane. This distance is called the margin.

The goal of SVMs is to find the optimal hyperplane because it not only classifies the existing dataset but also helps predict the class of the unseen data. And the optimal hyperplane is the one which has the biggest margin.

Mathematical Setup

Now that we have understood the basic setup of this algorithm, let us dive straight into the mathematical technicalities of SVMs.

I will assume you are familiar with basic mathematical concepts such as vectors, vector arithmetic (addition, subtraction, dot product) and orthogonal projection. Some of these concepts can also be found in the article Prerequisites of linear algebra for machine learning.

Equation of Hyperplane

You must have come across the equation of a straight line as $y = mx + c$, where $m$ is the slope and $c$ is the y-intercept of the line.

The generalized equation of a hyperplane is as follows:

$$w^T x = 0$$

Here $w$ and $x$ are vectors and $w^T x$ represents the dot product of the two vectors. The vector $w$ is often called the weight vector.

Consider the equation of the line written as $y - mx - c = 0$. In this case,

$$w = \begin{pmatrix} -c \\ -m \\ 1 \end{pmatrix} \quad \text{and} \quad x = \begin{pmatrix} 1 \\ x \\ y \end{pmatrix}$$

$$w^T x = -c \times 1 - m \times x + 1 \times y = y - mx - c = 0$$

These are just two different ways of representing the same thing. So why do we use $w^T x = 0$? Simply because this representation is easier to work with for higher-dimensional datasets, and because $w$ (leaving out its first, bias component) gives a vector normal to the hyperplane. This property will be useful once we start computing the distance from a point to the hyperplane.
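To make the equivalence concrete, here is a minimal Python sketch that builds the augmented vectors $w = (-c, -m, 1)$ and $x = (1, x, y)$ for one example line. The slope and intercept values are arbitrary illustrative choices, and the helper names are hypothetical.

```python
# Sketch: the line y = m*x + c written as w^T x = 0 using augmented vectors.
# The slope/intercept values are arbitrary, chosen only for illustration.

def line_as_weight_vector(m, c):
    """Return w = (-c, -m, 1) so that w^T (1, x, y) = y - m*x - c."""
    return (-c, -m, 1)

def augment(x, y):
    """Augmented input (1, x, y); the leading 1 pairs with the bias term -c."""
    return (1, x, y)

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

m, c = 2, 1                      # the line y = 2x + 1
w = line_as_weight_vector(m, c)

print(dot(w, augment(3, 7)))     # point on the line: 7 - 2*3 - 1 = 0
print(dot(w, augment(0, 0)))     # point below the line: 0 - 0 - 1 = -1

# Dropping the bias component leaves (-m, 1), which is orthogonal to the
# line's direction vector (1, m), i.e. it is normal to the line.
normal = w[1:]
print(dot(normal, (1, m)))       # -m*1 + 1*m = 0
```

A point on the line gives exactly 0, while a point off the line gives a nonzero value whose sign tells which side of the line it lies on.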

Understanding the constraints

The training data in our classification problem is of the form $\{(x_1, y_1), (x_2, y_2), \dots, (x_n, y_n)\} \subset \mathbb{R}^n \times \{-1, 1\}$. This means that each training example is a pair consisting of $x_i$, an n-dimensional feature vector, and $y_i$, the label of $x_i$. A label $y_i = 1$ means that the sample with feature vector $x_i$ belongs to class 1, and $y_i = -1$ means that it belongs to class −1.

In a classification problem, we thus try to find a function $y = f(x)$, $f: \mathbb{R}^n \to \{-1, 1\}$. The function $f$ is learned from the training dataset and then applied to classify unseen data.

There are infinitely many possible functions $f(x)$, so we have to restrict the class of functions we are dealing with. In the case of SVMs, this is the class of functions whose decision boundary is a hyperplane, represented as $w^T x = 0$.

It can also be represented as $\vec{w} \cdot \vec{x} + b = 0$, where $\vec{w} \in \mathbb{R}^n$ and $b \in \mathbb{R}$.

This divides the input space into two parts, one containing vectors of class −1 and the other containing vectors of class +1.
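A minimal sketch of how such a hyperplane is used for prediction, assuming hand-picked values for $\vec{w}$ and $b$ (they are illustrative, not learned): a point is assigned to class +1 or −1 depending on the sign of $\vec{w} \cdot \vec{x} + b$. The function names are hypothetical, and sending points that lie exactly on the hyperplane to +1 is an arbitrary convention chosen here.

```python
# Sketch of the decision rule implied by the hyperplane w.x + b = 0.
# w and b are hypothetical, hand-picked values (not the result of training).

def decision_value(w, b, x):
    """Return w.x + b for a single feature vector x."""
    return sum(wj * xj for wj, xj in zip(w, x)) + b

def predict(w, b, x):
    """Classify x as +1 or -1 by which side of the hyperplane it falls on."""
    # Points exactly on the hyperplane are assigned +1 by convention here.
    return 1 if decision_value(w, b, x) >= 0 else -1

w, b = (1.0, 1.0), -2.5   # the line x1 + x2 = 2.5

for point in [(0.0, 0.0), (3.0, 2.0), (1.0, 1.0), (2.0, 2.0)]:
    print(point, predict(w, b, point))
```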

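For the rest of this article, we will consider 2-dimensional vectors. Let $H_0$ be a hyperplane separating the dataset and satisfying the following:

$$\vec{w} \cdot \vec{x} + b = 0$$

Along with $H_0$, we can select two other hyperplanes $H_1$ and $H_2$ such that they also separate the data and have the following equations:

$$\vec{w} \cdot \vec{x} + b = \delta \quad \text{and} \quad \vec{w} \cdot \vec{x} + b = -\delta$$

This makes $H_0$ equidistant from $H_1$ and $H_2$.

The variable $\delta$ is not necessary: dividing $\vec{w}$ and $b$ by $\delta > 0$ describes exactly the same three hyperplanes, so we can set $\delta = 1$ to simplify the problem to $\vec{w} \cdot \vec{x} + b = 1$ and $\vec{w} \cdot \vec{x} + b = -1$.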

Next, we want to ensure that there are no points between them. To do this, we select only those hyperplanes that satisfy the following constraints:

For every vector $\vec{x}_i$, either:

- $\vec{w} \cdot \vec{x}_i + b \le -1$ for $\vec{x}_i$ having the class −1, or

- $\vec{w} \cdot \vec{x}_i + b \ge 1$ for $\vec{x}_i$ having the class 1

Combining the constraints

Both the constraints stated above can be combined into a single constraint.

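Constraint 1:

For $\vec{x}_i$ having the class −1, $\vec{w} \cdot \vec{x}_i + b \le -1$.

Multiplying both sides by $y_i$ (which is always −1 for this equation) reverses the direction of the inequality:

$y_i(\vec{w} \cdot \vec{x}_i + b) \ge y_i(-1)$, which implies $y_i(\vec{w} \cdot \vec{x}_i + b) \ge 1$ for $\vec{x}_i$ having the class −1.

Constraint 2: $y_i = 1$

Multiplying both sides of $\vec{w} \cdot \vec{x}_i + b \ge 1$ by $y_i = 1$ leaves the inequality unchanged: $y_i(\vec{w} \cdot \vec{x}_i + b) \ge 1$ for $\vec{x}_i$ having the class 1.

Combining both of the above, we get $y_i(\vec{w} \cdot \vec{x}_i + b) \ge 1$ for all $1 \le i \le n$.

This gives a single constraint that is mathematically equivalent to the original two. The combined constraint has the same effect, i.e., no points lie between the two hyperplanes.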
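The combined constraint is easy to check numerically. The sketch below uses a small hypothetical 2D dataset and a hand-picked $(\vec{w}, b)$ for the line $x_1 + x_2 = 2.5$, and uses Python's `fractions` module so that points lying exactly on $H_1$ or $H_2$ give exactly 1 rather than a rounded value.

```python
from fractions import Fraction as F

# Hypothetical toy dataset: three points in class -1, three in class +1.
X = [(0, 0), (1, 0), (0, 1), (2, 2), (3, 2), (2, 3)]
y = [-1, -1, -1, 1, 1, 1]

# Hand-picked hyperplane x1 + x2 = 2.5, scaled so that the closest points of
# each class give w.x + b = -1 or +1 (exact fractions avoid rounding errors).
w = (F(2, 3), F(2, 3))
b = F(-5, 3)

def functional_margin(w, b, x, label):
    """Return y_i * (w.x_i + b); the combined constraint requires this to be >= 1."""
    return label * (sum(wj * xj for wj, xj in zip(w, x)) + b)

def satisfies_constraints(w, b, X, y):
    """True if y_i * (w.x_i + b) >= 1 holds for every training example."""
    return all(functional_margin(w, b, xi, yi) >= 1 for xi, yi in zip(X, y))

print([str(functional_margin(w, b, xi, yi)) for xi, yi in zip(X, y)])
# ['5/3', '1', '1', '1', '5/3', '5/3']
print(satisfies_constraints(w, b, X, y))            # True

# Same line H0 with w and b halved: the closest points now give 1/2 < 1.
w_half = (F(1, 3), F(1, 3))
b_half = F(-5, 6)
print(satisfies_constraints(w_half, b_half, X, y))  # False
```

Halving $\vec{w}$ and $b$ describes the same line $H_0$ but fails the check, because the closest points then give $y_i(\vec{w} \cdot \vec{x}_i + b) = 1/2$. Fixing $\delta = 1$ is what pins down the scale of $\vec{w}$.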

Maximize the margin

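For the sake of simplicity, we will skip the derivation of the formula for the margin $m$, which is

$$m = \frac{2}{\|\vec{w}\|}$$

The only variable in this formula is $\vec{w}$, and $m$ is inversely proportional to $\|\vec{w}\|$, so to maximize the margin we have to minimize $\|\vec{w}\|$. Minimizing $\|\vec{w}\|$ is equivalent to minimizing $\frac{\|\vec{w}\|^2}{2}$, which is easier to optimize because it is a smooth quadratic function. This leads to the following optimization problem:

$$\min_{\vec{w}, b} \frac{\|\vec{w}\|^2}{2} \quad \text{subject to} \quad y_i(\vec{w} \cdot \vec{x}_i + b) \ge 1 \text{ for all } i = 1, \dots, n$$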
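The margin formula can be checked on a small hypothetical example. This sketch assumes the hand-picked values $\vec{w} = (2/3, 2/3)$ and $b = -5/3$ (the line $x_1 + x_2 = 2.5$, not the output of a solver) and uses the point-to-hyperplane distance $|\vec{w} \cdot \vec{x} + b| / \|\vec{w}\|$: a point on $H_1$ or $H_2$ is $1/\|\vec{w}\|$ away from $H_0$, so the total width is $2/\|\vec{w}\|$.

```python
import math

# Hand-picked hyperplane x1 + x2 = 2.5 (illustrative, not solver output).
w = (2 / 3, 2 / 3)
b = -5 / 3

def norm(v):
    return math.sqrt(sum(vj * vj for vj in v))

def distance_to_hyperplane(w, b, x):
    """Euclidean distance |w.x + b| / ||w|| from point x to the hyperplane w.x + b = 0."""
    return abs(sum(wj * xj for wj, xj in zip(w, x)) + b) / norm(w)

margin = 2 / norm(w)
print(margin)  # about 2.1213, i.e. 3 / sqrt(2)

# (1, 0) lies on H2 (w.x + b = -1) and (2, 2) lies on H1 (w.x + b = +1),
# so each is 1/||w|| from H0 and together they span the full margin.
d_neg = distance_to_hyperplane(w, b, (1, 0))
d_pos = distance_to_hyperplane(w, b, (2, 2))
print(d_neg + d_pos)  # equal to the margin up to floating-point rounding
```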

The above is the case when our data is linearly separable. In many cases, however, the data cannot be perfectly classified by a linear boundary. In such cases, the Support Vector Machine looks for the hyperplane that maximizes the margin while minimizing misclassifications.

For this, we introduce slack variables $\zeta_i$, which allow some points to violate the margin but penalize them for doing so.

In this scenario, the algorithm tries to keep the slack variables at zero while maximizing the margin. Note, however, that it minimizes the sum of the distances of the violating points from the margin hyperplanes, not the number of misclassifications.

The constraints now change to $y_i(\vec{w} \cdot \vec{x}_i + b) \ge 1 - \zeta_i$ for all $1 \le i \le n$, with $\zeta_i \ge 0$,

and the optimization problem changes to

$$\min_{\vec{w}, b, \zeta} \frac{\|\vec{w}\|^2}{2} + C \sum_i \zeta_i \quad \text{subject to} \quad y_i(\vec{w} \cdot \vec{x}_i + b) \ge 1 - \zeta_i,\ \zeta_i \ge 0 \text{ for all } i = 1, \dots, n$$
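For a fixed $(\vec{w}, b)$, the smallest slack that satisfies each constraint is $\zeta_i = \max(0, 1 - y_i(\vec{w} \cdot \vec{x}_i + b))$, which is the hinge loss. The sketch below computes these slacks and the soft-margin objective for a hypothetical toy dataset plus one class −1 outlier at $(1.5, 1.5)$. The hyperplane is hand-picked, so this only evaluates the objective; it does not minimize it.

```python
from fractions import Fraction as F

# Hypothetical data: a separable toy set plus one class -1 outlier at (1.5, 1.5).
X = [(0, 0), (1, 0), (0, 1), (F(3, 2), F(3, 2)), (2, 2), (3, 2), (2, 3)]
y = [-1, -1, -1, -1, 1, 1, 1]

w = (F(2, 3), F(2, 3))   # hand-picked hyperplane x1 + x2 = 2.5
b = F(-5, 3)

def slacks(w, b, X, y):
    """Smallest zeta_i >= 0 with y_i (w.x_i + b) >= 1 - zeta_i,
    i.e. zeta_i = max(0, 1 - y_i (w.x_i + b)) (the hinge loss)."""
    return [max(F(0), 1 - yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b))
            for xi, yi in zip(X, y)]

def soft_margin_objective(w, b, X, y, C):
    """||w||^2 / 2 + C * sum_i zeta_i for the given (w, b)."""
    return sum(wj * wj for wj in w) / 2 + C * sum(slacks(w, b, X, y))

print([str(z) for z in slacks(w, b, X, y)])     # only the outlier needs slack: 4/3
print(soft_margin_objective(w, b, X, y, C=1))   # 4/9 + 4/3 = 16/9
```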

Here, $C$ is the regularization parameter that controls the trade-off between the slack variable penalty (misclassifications) and the width of the margin.

- A small $C$ makes the constraints easy to ignore, which leads to a large margin.

- A large $C$ makes the constraints hard to ignore, which leads to a small margin.

- For $C = \infty$, all the constraints are enforced (the hard-margin case).
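The effect of $C$ can be illustrated by evaluating the soft-margin objective for two hand-picked candidate hyperplanes on a hypothetical dataset (a separable toy set plus a class −1 outlier at $(1.5, 1.5)$). This is only a comparison of two candidates, not a solver: the wide-margin line pays a slack penalty for the outlier, while the narrow-margin line separates every point but has a larger $\|\vec{w}\|$.

```python
from fractions import Fraction as F

# Hypothetical data: separable toy set plus one class -1 point at (1.5, 1.5).
X = [(0, 0), (1, 0), (0, 1), (F(3, 2), F(3, 2)), (2, 2), (3, 2), (2, 3)]
y = [-1, -1, -1, -1, 1, 1, 1]

def objective(w, b, C):
    """Soft-margin objective ||w||^2/2 + C * sum(max(0, 1 - y_i (w.x_i + b)))."""
    hinge = sum(max(F(0), 1 - yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b))
                for xi, yi in zip(X, y))
    return sum(wj * wj for wj in w) / 2 + C * hinge

# Two hand-picked candidates (not solver output), both with normal direction (1, 1).
candidates = {
    "wide (ignores outlier)": ((F(2, 3), F(2, 3)), F(-5, 3)),  # margin 3/sqrt(2)
    "narrow (separates all)": ((F(2), F(2)), F(-7)),           # margin 1/sqrt(2)
}

for C in (F(1), F(10)):
    best = min(candidates, key=lambda name: objective(*candidates[name], C))
    print(f"C={C}: {best}")
# C=1: wide (ignores outlier)
# C=10: narrow (separates all)
```

With these numbers the wide candidate's objective is $4/9 + 4C/3$ and the narrow one's is $4$, so the preference flips at $C = 8/3$: below it the wider margin wins, above it the constraints dominate.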

The easiest way to separate two classes of data is a line in the case of 2D data and a plane in the case of 3D data. But it is not always possible to use lines or planes, and sometimes a nonlinear boundary is required to separate the classes. Support Vector Machines handle such situations by mapping the data into a higher-dimensional feature space in which a linear hyperplane can separate the classes. A kernel function computes dot products in that feature space directly from the original inputs, so the mapping never has to be computed explicitly. This is known as the kernel trick.

If $\phi$ is the feature map associated with the kernel (so that the kernel is $K(x_i, x_j) = \phi(x_i) \cdot \phi(x_j)$), mapping $x_i$ to $\phi(x_i)$, the constraints change to $y_i(\vec{w} \cdot \phi(x_i) + b) \ge 1 - \zeta_i$ for all $1 \le i \le n$, $\zeta_i \ge 0$.

And the optimization problem is

$$\min_{\vec{w}, b, \zeta} \frac{\|\vec{w}\|^2}{2} + C \sum_i \zeta_i \quad \text{subject to} \quad y_i(\vec{w} \cdot \phi(x_i) + b) \ge 1 - \zeta_i,\ \zeta_i \ge 0 \text{ for all } 1 \le i \le n$$
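The kernel trick can be seen on a small example. For 2D inputs, the homogeneous degree-2 polynomial kernel $K(x, z) = (x \cdot z)^2$ equals $\phi(x) \cdot \phi(z)$ for the explicit mapping $\phi(x) = (x_1^2, \sqrt{2}\,x_1 x_2, x_2^2)$. The sketch below checks this and shows that a few points inside and outside the unit circle, which no line separates in 2D, are split by a plane in the mapped space. The kernel choice and the data points are illustrative assumptions.

```python
import math

def phi(x):
    """Explicit degree-2 feature map for 2D inputs: (x1, x2) -> (x1^2, sqrt(2)*x1*x2, x2^2)."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2)

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def poly_kernel(x, z):
    """Homogeneous degree-2 polynomial kernel K(x, z) = (x . z)^2, computed in 2D."""
    return dot(x, z) ** 2

x, z = (1.0, 2.0), (3.0, -1.0)
print(poly_kernel(x, z))    # (1*3 + 2*(-1))^2 = 1.0
print(dot(phi(x), phi(z)))  # the same value, up to rounding, computed in 3D

# Inner points vs. outer points: (-0.5, 0.3) lies inside the triangle formed by
# the outer points, so no line separates the two groups in 2D. In the mapped
# space, the plane phi_1 + phi_3 = 1 (weights (1, 0, 1), bias -1) does.
inner = [(0.1, 0.2), (-0.5, 0.3), (0.0, -0.6)]
outer = [(1.5, 0.0), (-1.0, 1.0), (0.3, -1.4)]
w_feat, b_feat = (1.0, 0.0, 1.0), -1.0
print(all(dot(w_feat, phi(p)) + b_feat < 0 for p in inner))  # True
print(all(dot(w_feat, phi(p)) + b_feat > 0 for p in outer))  # True
```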

We will not get into the solution of these optimization problems. They are convex quadratic programming problems, and the most common approach is to solve them with convex optimization methods, typically applied to the dual form of the problem.

Pros and Cons of Support Vector Machines

Every classification algorithm has its own advantages and disadvantages that come into play according to the dataset being analyzed. Some of the advantages of SVMs are as follows:

- The SVM training problem is convex, which guarantees optimality: the solution found is a global minimum, not merely a local minimum.

- SVM is suitable for both linearly and nonlinearly separable data (using the kernel trick). The main thing to choose is the regularization parameter $C$, along with the kernel and its parameters.

- SVMs work well on both small and high-dimensional data spaces. They work effectively on high-dimensional datasets because the complexity of a trained SVM is generally characterized by the number of support vectors rather than by the dimensionality. Even if all training examples other than the support vectors are removed and the training is repeated, we will get the same optimal separating hyperplane.

- SVMs can work effectively on smaller training datasets, since the decision boundary depends only on the support vectors rather than on the entire dataset.
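The support-vector claim can be checked with scikit-learn. This sketch, assuming a hypothetical six-point toy dataset, fits a linear `SVC`, then refits using only the rows listed in `support_` and compares `coef_` and `intercept_`. `C=10.0` is an arbitrary value that is large enough for this separable toy set to behave like the hard-margin SVM.

```python
import numpy as np
from sklearn.svm import SVC

# Hypothetical separable toy data (class -1 first, then class +1).
X = np.array([[0, 0], [1, 0], [0, 1], [2, 2], [3, 2], [2, 3]], dtype=float)
y = np.array([-1, -1, -1, 1, 1, 1])

# Linear kernel; C=10.0 is large enough here that no slack is needed.
full = SVC(kernel="linear", C=10.0).fit(X, y)
print("support vector indices:", full.support_)
print("w =", full.coef_, "b =", full.intercept_)

# Refit using only the support vectors: the hyperplane should be the same
# up to the solver's numerical tolerance.
sv = full.support_
reduced = SVC(kernel="linear", C=10.0).fit(X[sv], y[sv])
print("w =", reduced.coef_, "b =", reduced.intercept_)
```

For this data the optimal hyperplane is $x_1 + x_2 = 2.5$ with $\vec{w} = (2/3, 2/3)$ and $b = -5/3$, and the two fits agree only up to the solver's tolerance, so any comparison should allow a small tolerance.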

Disadvantages of SVMs are as follows:

- They are not suitable for larger datasets, because training can be slow and computationally intensive: for kernel SVMs, training time typically grows at least quadratically with the number of training samples.

- They are less effective on noisier datasets with overlapping classes.

SVM with Python and R

Let us look at the libraries and functions used to implement SVM in Python and R.

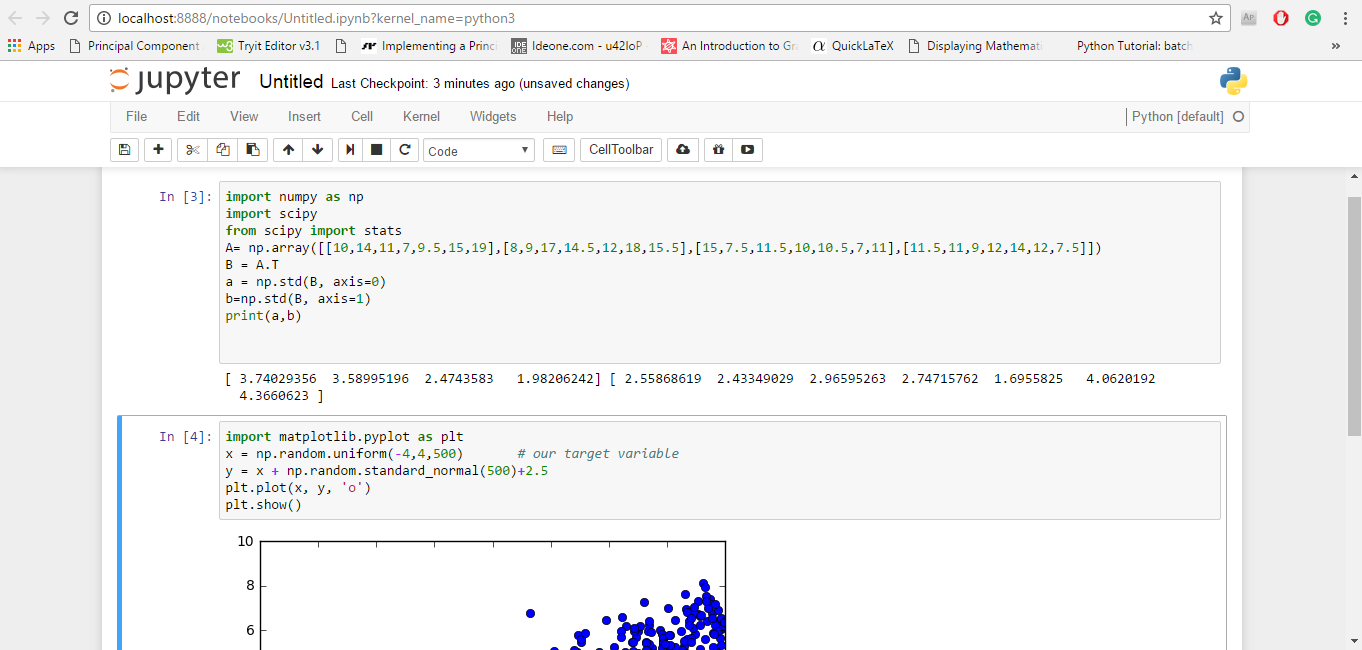

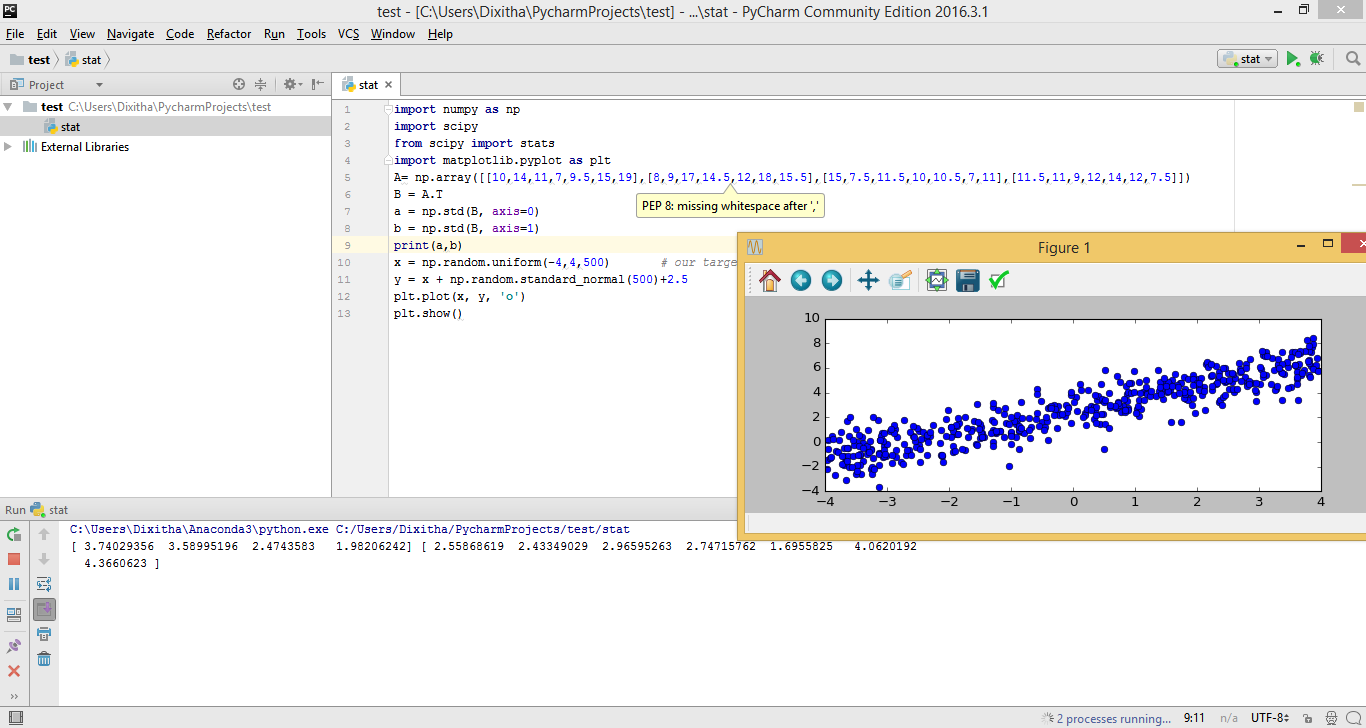

Python Implementation

The most widely used library for implementing machine learning algorithms in Python is scikit-learn. The class used for SVM classification in scikit-learn is svm.SVC():

sklearn.svm.SVC(C=1.0, kernel='rbf', degree=3, gamma='scale')

Parameters are as follows:

- C: It is the regularization parameter, C, i.e., the penalty applied to the error (slack) term. It must be positive; the default value is 1.0.

- kernel: It specifies the kernel type to be used in the algorithm. It can be 'linear', 'poly', 'rbf', 'sigmoid', 'precomputed', or a callable. The default value is 'rbf'.

- degree: It is the degree of the polynomial kernel function ('poly') and is ignored by all other kernels. The default value is 3.

- gamma: It is the kernel coefficient for 'rbf', 'poly', and 'sigmoid'. If gamma is 'auto', 1/n_features is used; if it is 'scale' (the default since scikit-learn 0.22), 1/(n_features * X.var()) is used.

There are many advanced parameters too, which I have not discussed here. You can check them out here.

https://gist.github.com/HackerEarthBlog/07492b3da67a2eb0ee8308da60bf40d9
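As a minimal sketch (not the code from the gist above), here is `SVC` trained on scikit-learn's built-in Iris dataset, which is used only for convenience. The parameter values are passed explicitly to mirror the list above; the 70/30 split with a fixed `random_state` is an assumption made so the run is repeatable.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# Iris is used here only as a convenient built-in example dataset.
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y
)

# RBF kernel with C and gamma set explicitly (these match scikit-learn's
# current defaults for SVC).
clf = SVC(C=1.0, kernel="rbf", gamma="scale")
clf.fit(X_train, y_train)

accuracy = clf.score(X_test, y_test)
print(f"test accuracy: {accuracy:.3f}")
print("support vectors per class:", clf.n_support_)
```

Iris has three classes; `SVC` handles multiclass problems internally with a one-vs-one scheme, so no extra code is needed.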

One can tune the SVM by changing the parameters $C$ and $\gamma$ and the kernel function. The tool for tuning parameters available in scikit-learn is called GridSearchCV().

sklearn.model_selection.GridSearchCV(estimator, param_grid)

Parameters of this function are defined as:

- estimator: It is the estimator object, which is svm.SVC() in our case.

- param_grid: It is a dictionary (or a list of dictionaries) with parameter names (strings) as keys and lists of parameter settings to try as values.

To know more about other parameters of GridSearchCV(), click here.

https://gist.github.com/HackerEarthBlog/a84a446810494d4ca0c178e864ab2391

In the above code, the parameters we have considered for tuning are kernel, C, and gamma. The candidate values from which the best one is chosen are the ones written in the brackets. Here, we have only given a few values to consider; a whole range of values can be given for tuning, but it will take longer to execute.
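A grid search along these lines might look like the following sketch. The grid values and the use of the Iris dataset are illustrative assumptions, not the values from the gist. Passing `param_grid` as a list of dictionaries avoids trying `gamma` values with the linear kernel, which ignores `gamma`; every remaining combination is evaluated with 5-fold cross-validation, which is why larger grids take longer.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)

# Illustrative candidate values; a wider grid is more thorough but slower,
# because every combination is trained once per cross-validation fold.
param_grid = [
    {"kernel": ["linear"], "C": [0.1, 1, 10]},
    {"kernel": ["rbf"], "C": [0.1, 1, 10], "gamma": [0.01, 0.1, 1]},
]

search = GridSearchCV(SVC(), param_grid, cv=5)
search.fit(X, y)

print("best parameters:", search.best_params_)
print(f"best mean CV accuracy: {search.best_score_:.3f}")
```

After fitting, `search.best_estimator_` is an `SVC` refit on the full data with the best parameters, and can be used directly for prediction.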

R Implementation

The package that we will use for implementing the SVM algorithm in R is e1071. The function used will be svm().

https://gist.github.com/HackerEarthBlog/0336338c5d93dc3d724a8edb67ad0a05

Summary

In this article, I have gone through a very basic explanation of the SVM classification algorithm. I have left out a few mathematical complications, such as calculating distances and solving the optimization problem. But I hope this gives you enough know-how about how a machine learning algorithm, SVM, can be modified based on the type of dataset provided.